Listify – Directory & Business Listing WordPress Theme

LIVE PREVIEW | BUY FOR $69

This won’t be the first time you look for

Empower Your Online Success with Docuneeds

Let’s achieve your goals together!

Captivating websites that combine stunning visuals with seamless functionality.

Customized web development solutions tailored to your business needs, ensuring a seamless user experience and optimal performance.

Strategic campaigns to boost brand awareness, engage your audience, and drive traffic.

Complete management of your social media profiles, including content creation and posting.

Robust and user-friendly platforms to launch and manage successful online stores.

Dedicated support and assistance to address any technical issues, provide guidance, and ensure a smooth and hassle-free experience.

Our portfolio showcases our successful projects, highlighting our expertise in delivering outstanding results for our clients.

Our valued customers are at the heart of everything we do. We strive to exceed their expectations and foster long-lasting relationships.

Stay up to date with our latest blog posts, where we share valuable insights, tips, and trends in the digital marketing industry.

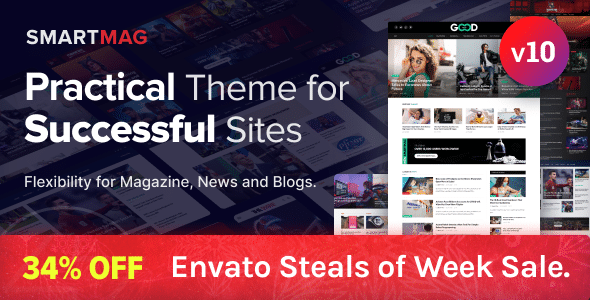

LIVE PREVIEW | BUY FOR $39

Extremely Flexible and Powerful WordPress theme empowering Modern &

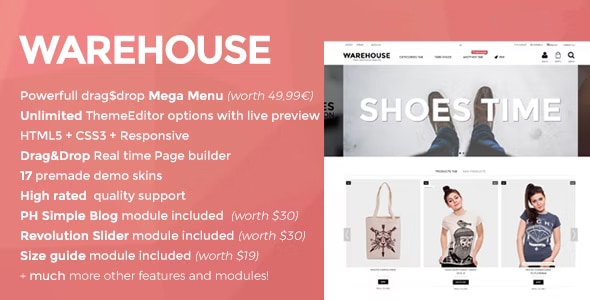

LIVE PREVIEW | BUY FOR $105

Warehouse is a clean, powerful HTML5 responsive

Ready to kickstart your digital success? Let’s collaborate on a project that takes your business to new heights!

Docuneeds Digital Marketing: Elevate your business with our cutting-edge strategies and expert solutions in the ever-evolving digital world.

©2016-2023. Docuneeds Digital Solutions. All rights reserved.

Sign up now and receive an email once new content is published.